Moore's Law and Bigger Chips Again

Moore's Law is dead, right? Think again.

Although the historical annual improvement of about 40% in central processing unit performance is slowing, the combination of CPUs packaged with alternative processors is improving at a rate of more than 100% per annum. These unprecedented and massive improvements in processing power, combined with data and artificial intelligence, will completely change the way we think about designing hardware, writing software and applying technology to businesses.

Every industry will be disrupted. You hear that all the time. Well, it's absolutely true, and we're going to explain why and what it all means.

In this Breaking Analysis, we're going to unveil some data that suggests we're entering a new era of innovation, where inexpensive processing capabilities will power an explosion of machine intelligence applications. We'll also tell you what new bottlenecks will emerge and what this means for system architectures and industry transformations in the coming decade.

Is Moore's Law really dead?

We've heard it hundreds of times in the past decade. EE Times has written about it, as have MIT Technology Review, CNET, SiliconANGLE and even industry associations that marched to the cadence of Moore's Law. But our friend and colleague Patrick Moorhead got it right when he said:

Moore's Law, by the strictest definition of doubling chip densities every two years, isn't happening anymore.

And that's true. He's absolutely right. However, he couched that statement by saying "by the strictest definition" for a reason: he's smart enough to know that the chip industry is a master at figuring out workarounds.

Historical performance curves are being shattered

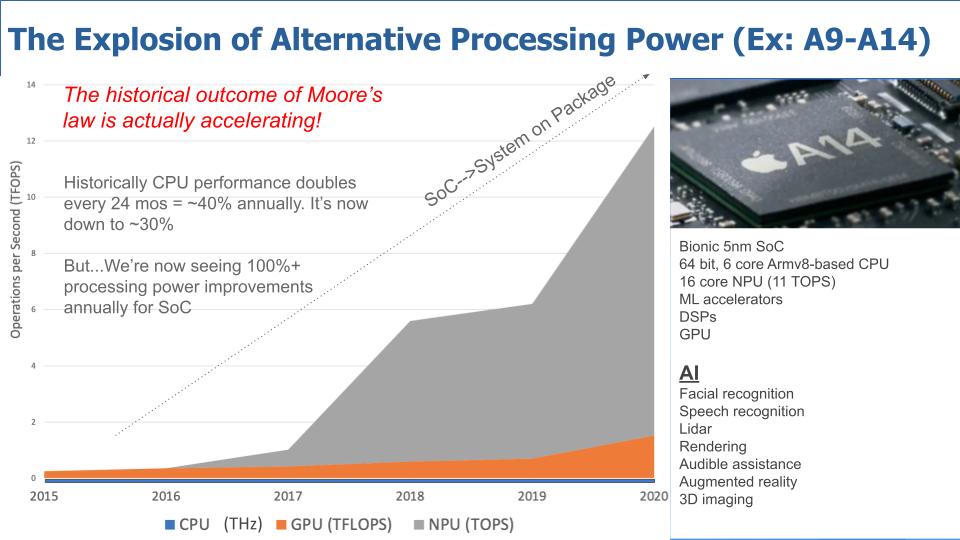

The graphic above is proof that the death of Moore's Law by its strictest definition is irrelevant.

The fact is that the historical outcome of Moore's Law is actually accelerating, quite dramatically. This graphic traces the progression of Apple Inc.'s system-on-chip developments, starting with the A9 and culminating in the A14 five-nanometer Bionic system on a chip.

The vertical axis shows operations per second and the horizontal axis shows time for three processor types: the CPU, measured in terahertz (the blue line, which you can hardly see); the graphics processing unit or GPU, measured in trillions of floating point operations per second (orange); and the neural processing unit or NPU, measured in trillions of operations per second (the exploding gray area).

Many folks will remember that historically, we rushed out to buy the latest and greatest personal computer because the newer models had faster cycle times, that is, more gigahertz. The outcome of Moore's Law was that performance would double every 24 months, or about 40% annually. CPU performance improvements have now slowed to roughly 30% annually, so technically speaking, Moore's Law is dead.
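To see where the "about 40%" figure comes from, here is a minimal Python sketch of the arithmetic: a quantity that doubles every m months grows by a factor of 2^(12/m) per year, so doubling every 24 months works out to roughly 41% a year. Run in reverse, a 30% annual pace means performance takes roughly 32 months to double. The function names are our own, chosen for illustration.

```python
import math

def annual_rate_from_doubling(months: float) -> float:
    """Annual growth rate implied by doubling every `months` months."""
    return 2 ** (12 / months) - 1

def doubling_months_from_annual_rate(rate: float) -> float:
    """Months needed to double at a constant annual growth `rate`."""
    return 12 * math.log(2) / math.log(1 + rate)

if __name__ == "__main__":
    # Classic Moore's Law pace: doubling every 24 months.
    print(f"{annual_rate_from_doubling(24):.1%} per year")  # 41.4% per year
    # Today's slower CPU pace of roughly 30% a year.
    print(f"{doubling_months_from_annual_rate(0.30):.1f} months to double")  # 31.7 months to double
```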

Apple's SoC performance shatters the norm

Combined, the improvements in Apple's SoC since 2015 have been on a pace that's higher than 118% annually. It's actually higher than that, because 118% is the figure for only the three processor types shown above. In the graphic, we're not even counting the impact of the digital signal processors and accelerator components of the system, which would push it higher.
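Compounding is what makes the gap between these rates so dramatic. The Python sketch below assumes five years from the A9 (2015) to the A14 (2020) and constant annual rates, and compares the cumulative gains. It also includes the standard compound annual growth rate (CAGR) formula, one common way to turn two endpoint measurements into an annual rate; we don't know the exact method behind the 118% figure, so treat this as illustrative only.

```python
def cumulative_gain(annual_rate: float, years: int) -> float:
    """Total improvement factor after compounding `annual_rate` for `years` years."""
    return (1 + annual_rate) ** years

def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two measurements `years` apart."""
    return (end / start) ** (1 / years) - 1

if __name__ == "__main__":
    years = 5  # assumption: A9 (2015) to A14 (2020)
    for label, rate in [("CPU alone, ~30%/yr", 0.30),
                        ("Moore's Law pace, ~40%/yr", 0.40),
                        ("Apple SoC combined, ~118%/yr", 1.18)]:
        print(f"{label}: {cumulative_gain(rate, years):.1f}x")
    # Prints 3.7x, 5.4x and 49.2x respectively.
```

In other words, a five-year run at 118% a year yields roughly a 49-fold gain, versus under 4x at today's CPU-only pace.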

Apple's A14, shown above on the right, is quite amazing with its 64-bit architecture, multiple cores and alternative processor types. But the important thing is what you can do with all this processing power – in an iPhone! The types of AI continue to evolve, from facial recognition to speech and natural language processing, rendering videos, helping the hearing impaired and eventually bringing augmented reality to the palm of your hand.

Quite incredible.

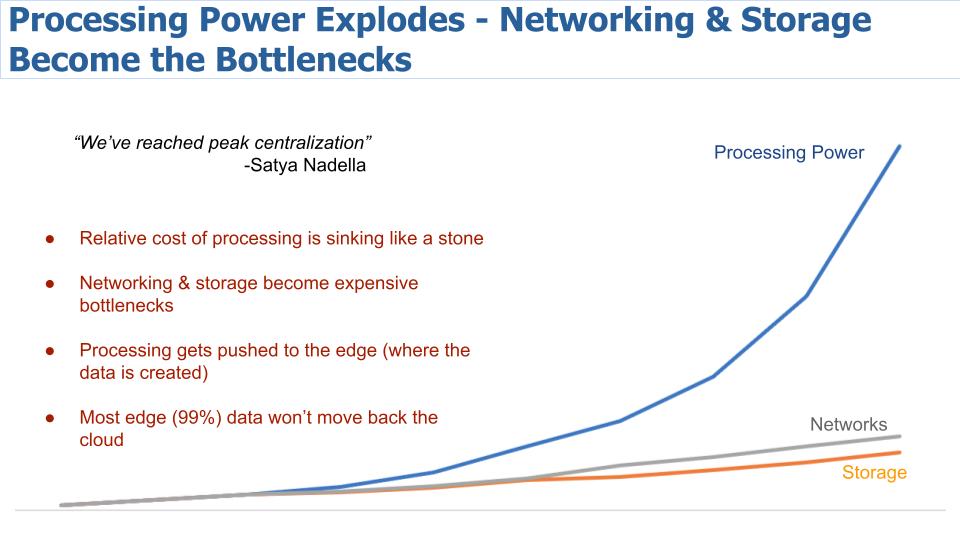

Processing goes to the edge – networks and storage become the bottlenecks

We recently reported Microsoft Corp. Chief Executive Satya Nadella's epic quote that we've reached peak centralization. The graphic above paints a telling picture. We just showed that processing power is accelerating at unprecedented rates. And costs are dropping like a rock. Apple's A14 costs the company $50 per chip. At its v9 announcement, Arm said it will have chips that can go into refrigerators to optimize energy use and save 10% annually on power consumption. It said that chip will cost $1: a buck to shave 10% off your refrigerator's electricity bill.
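The $1 figure is easy to sanity-check with a simple payback calculation. In the hypothetical Python sketch below, only the $1 chip cost and the 10% savings come from the Arm claim; the refrigerator's annual consumption (400 kWh) and the electricity price ($0.15 per kWh) are our own illustrative assumptions, not data from Arm.

```python
def payback_months(chip_cost: float, annual_kwh: float,
                   price_per_kwh: float, savings_fraction: float) -> float:
    """Months until the energy savings cover the cost of the chip."""
    annual_savings = annual_kwh * price_per_kwh * savings_fraction
    return 12 * chip_cost / annual_savings

if __name__ == "__main__":
    # $1 chip and 10% savings per the Arm claim; 400 kWh/year and $0.15/kWh are assumptions.
    months = payback_months(chip_cost=1.0, annual_kwh=400,
                            price_per_kwh=0.15, savings_fraction=0.10)
    print(f"Payback in about {months:.1f} months")  # Payback in about 2.0 months
```

Under these assumptions the chip pays for itself within a couple of months; a more efficient refrigerator or cheaper power would stretch that out.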

Processing is plentiful and inexpensive. But look at where the expensive bottlenecks are: networks and storage. So what does this mean?

It means that processing is going to get pushed to the edge, wherever the data is born. Storage and networking will become increasingly distributed and decentralized, with custom silicon and processing power placed throughout the system and AI embedded to optimize workloads for latency, performance, bandwidth, security and other dimensions of value.
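The "push processing to where the data is born" logic can be made concrete with a back-of-the-envelope placement rule: compare the time to process data locally against the time to ship it over the network and then process it remotely. The Python sketch below is a deliberate simplification that weighs only elapsed time (not cost, security or the other dimensions of value mentioned above), and the function names and numbers are hypothetical.

```python
def transfer_hours(data_gb: float, uplink_mbps: float) -> float:
    """Hours to move `data_gb` decimal gigabytes over a `uplink_mbps` megabit/s link."""
    bits = data_gb * 8e9
    return bits / (uplink_mbps * 1e6) / 3600

def place_job(data_gb: float, uplink_mbps: float,
              edge_hours: float, cloud_hours: float) -> str:
    """Pick whichever location finishes first; ties go to the edge, where the data already is."""
    cloud_total = transfer_hours(data_gb, uplink_mbps) + cloud_hours
    return "edge" if edge_hours <= cloud_total else "cloud"

if __name__ == "__main__":
    print(f"{transfer_hours(1000, 100):.1f} hours to ship 1 TB over 100 Mbps")  # 22.2 hours to ship 1 TB over 100 Mbps
    # Even if the cloud computes 4x faster, shipping the data first loses.
    print(place_job(1000, 100, edge_hours=2.0, cloud_hours=0.5))  # edge
```

When the network is the bottleneck, the transfer term dominates, which is why cheap local processing wins for large, fast-growing edge data sets.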

And remember, most of the data, 99% of it, will stay at the edge. We like to use Tesla Inc. as an example. The vast majority of data a Tesla car creates will never go back to the cloud. It doesn't even get persisted: Tesla saves perhaps 5 minutes of data. But some data will occasionally be sent back to the cloud to train AI models; we'll come back to that.

But the picture above says that if you're a hardware company, you'd better start thinking about how to take advantage of that blue line, the explosion of processing power. Dell Technologies Inc., Hewlett Packard Enterprise Co., Pure Storage Inc., NetApp Inc. and the like are either going to start designing custom silicon or they're going to be disrupted, in our view. Amazon Web Services Inc., Google LLC and Microsoft are all doing it for a reason, as are Cisco Systems Inc. and IBM Corp. As cloud consultant Sarbjeet Johal has said, "this is not your grandfather's semiconductor business."

And if you're a software engineer, you're going to be writing applications that take advantage of all the data being collected and bring to bear this immense processing power to create capabilities we've never seen before.

AI everywhere

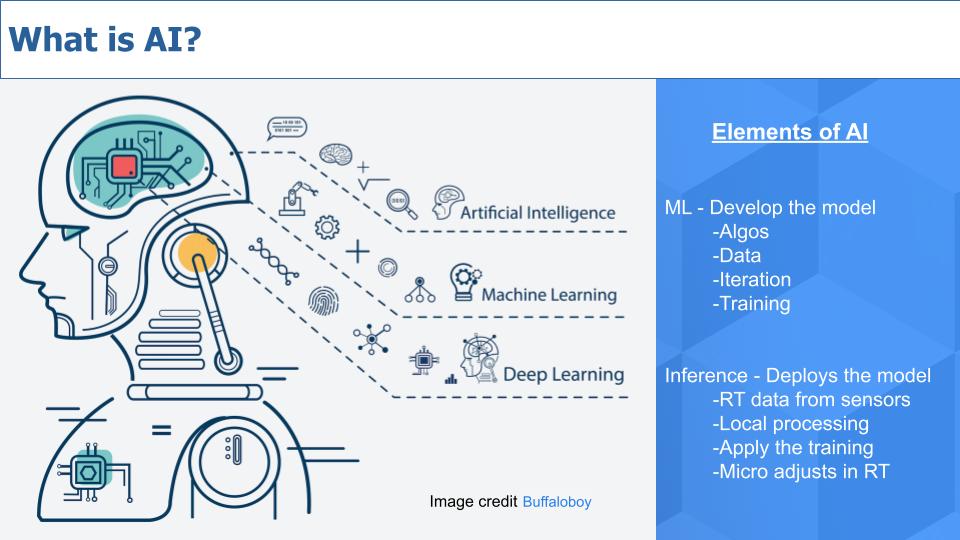

Massive increases in processing power and cheap silicon will power the next wave of AI, machine intelligence, machine learning and deep learning.

We sometimes use artificial intelligence and machine intelligence interchangeably. This notion comes from our collaborations with author David Moschella. Interestingly, in his book "Seeing Digital," Moschella says "there's nothing artificial" about this:

There's nothing artificial about machine intelligence just like there's nothing artificial about the strength of a tractor.

It's a nuance, but precise language can often bring clarity. We hear a lot about machine learning and deep learning and think of them as subsets of AI. Machine learning applies algorithms and code to data to get "smarter," for example by building better models that can lead to augmented intelligence and better decisions by humans or machines. These models improve as they get more data and iterate over time.
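To make "iterate over time" concrete, here is a minimal Python sketch of plain batch gradient descent fitting a one-variable linear model to synthetic data. It's a toy, not how production AI is built, but it shows the basic loop: measure the error, nudge the parameters to reduce it, repeat. The data (drawn from y = 3x + 0.5 plus noise), the learning rate and the function names are our own illustrative choices.

```python
import random

def make_data(n: int, seed: int = 0):
    """Synthetic data drawn from y = 3x + 0.5 plus a little Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 1) for _ in range(n)]
    ys = [3.0 * x + 0.5 + rng.gauss(0, 0.05) for x in xs]
    return xs, ys

def mse(w: float, b: float, xs, ys) -> float:
    """Mean squared error of the model y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, epochs: int, lr: float = 0.1):
    """Plain batch gradient descent on the squared error, starting from w = b = 0."""
    w, b, n = 0.0, 0.0, len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

if __name__ == "__main__":
    xs, ys = make_data(200)
    for epochs in (1, 10, 100, 1000):
        w, b = train(xs, ys, epochs)
        print(f"{epochs:>5} iterations: error {mse(w, b, xs, ys):.4f}, w={w:.2f}, b={b:.2f}")
```

The error shrinks with each tenfold increase in iterations, and the learned w and b drift toward the 3.0 and 0.5 used to generate the data.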

Deep learning is a more advanced type of machine learning that uses more complex math, typically neural networks with many layers.

The right side of the chart above shows the two broad elements of AI. The point we want to make here is that much of the activity in AI today is focused on building and training models, and this is mostly happening in the cloud. But we think AI inference will bring the most exciting innovations in the coming years.

AI inference unlocks huge value

Inference is the deployment of the model: taking real-time data from sensors, processing that data locally, applying the training that has been developed in the cloud and making micro-adjustments in real time.

Let's take an example. We love car examples, and observing Tesla is instructive and a good model of how the edge may evolve. So think about an algorithm that optimizes the performance and safety of a car on a turn. The model takes inputs with data on friction, road conditions, tire angles, tire wear, tire pressure and the like. And the model builders keep testing, adding data and iterating on the model until it's ready to be deployed.

Then the intelligence from this model goes into an inference engine, which is a chip running software, that goes into a car, gets data from sensors and makes micro-adjustments in real time to steering, braking and the like. Now, as we said before, Tesla persists the data for a very short period of time because there's so much of it. But it can choose to store certain data selectively if needed to send back to the cloud and further train the model. For example, if an animal runs into the road during slick weather, maybe Tesla persists that data snapshot, sends it back to the cloud, combines it with other data and further perfects the model to improve safety.
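The pattern described above (run inference on every reading, keep only a short rolling window, upload selected snapshots) can be sketched in a few lines of Python. This is a hypothetical illustration, not Tesla's actual design: the sensor fields, the made-up model weights, the 10 Hz sampling rate and the "slick road plus obstacle" trigger are all our assumptions. The five-minute window mirrors the retention figure cited earlier.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Reading:
    t: float           # seconds since the trip started
    friction: float    # hypothetical road-friction estimate, 0 (ice) to 1 (dry)
    tire_angle: float  # tire angle in degrees
    obstacle: bool     # hypothetical "object in the road" flag

# Hypothetical weights, assumed to have been trained in the cloud and shipped to the car.
WEIGHTS = {"low_friction": 0.6, "tire_angle": 0.01}
BIAS = 0.05

def infer_brake_adjustment(r: Reading) -> float:
    """Edge inference: apply the fixed, pre-trained weights to one reading.
    Returns a braking adjustment clamped to the range [0, 1]."""
    raw = (BIAS
           + WEIGHTS["low_friction"] * (1.0 - r.friction)
           + WEIGHTS["tire_angle"] * abs(r.tire_angle))
    return max(0.0, min(1.0, raw))

class EdgeRecorder:
    def __init__(self, window_s: float, hz: int):
        # Only the most recent window is kept; older readings fall off automatically.
        self.buffer = deque(maxlen=int(window_s * hz))
        self.uploads = []  # snapshots (lists of Reading) queued for the cloud

    def step(self, r: Reading) -> float:
        self.buffer.append(r)
        adjustment = infer_brake_adjustment(r)
        # Hypothetical trigger: an obstacle on a slick road is worth sending back.
        if r.obstacle and r.friction < 0.3:
            self.uploads.append(list(self.buffer))
        return adjustment

if __name__ == "__main__":
    rec = EdgeRecorder(window_s=300, hz=10)  # five minutes at 10 readings per second
    for i in range(4000):
        rec.step(Reading(t=i / 10, friction=0.2 if i >= 3400 else 0.9,
                         tire_angle=5.0, obstacle=(i == 3500)))
    print(len(rec.buffer), len(rec.uploads), len(rec.uploads[0]))  # 3000 1 3000
```

Of 4,000 readings, only the last 3,000 are ever held in memory, and just one snapshot is queued for upload; everything else is used for inference and then discarded.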

This is just one example of thousands of AI inference use cases that will further develop in the coming decade.

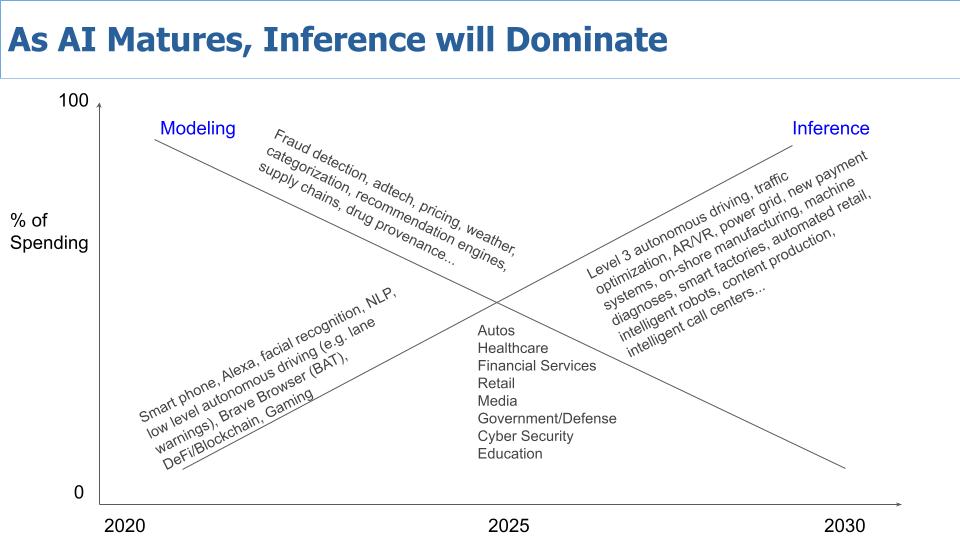

AI value shifts from modeling to inferencing

This conceptual chart above shows the percentage of spend over time on modeling versus inference. You can see some of the applications that get attention today and how these apps will mature over time as inference becomes more mainstream. The opportunities for AI inference at the edge and in the "internet of things" are enormous.

Modeling will continue to be important. Today's prevalent modeling workloads in fraud, adtech, weather, pricing, recommendation engines and more will just keep getting better and better. But inference, we think, is where the rubber meets the road, as shown in the previous example.

And in the middle of the graphic we show the industries, which will all be transformed by these trends.

One other point on that: Moschella in his book explains why, historically, vertical industries remained pretty stovepiped from each other. They each had their own "stack" of production, supply, logistics, sales, marketing, service, fulfillment and the like. And expertise tended to reside and stay within that industry, and companies, for the most part, stuck to their respective swim lanes.

But today we see so many examples of tech giants entering other industries: Amazon entering grocery, media and healthcare; Apple in finance and EVs; Tesla eyeing insurance. There are many examples of tech giants crossing traditional industry boundaries, and the enabler is data. Car manufacturers over time will have better data than insurance companies, for example. DeFi, or decentralized finance, and other platforms using the blockchain will continue to improve with AI and disrupt traditional payment systems, and on and on.

Hence we believe the oft-repeated bromide that no industry is safe from disruption.

Snapshot of AI in the enterprise

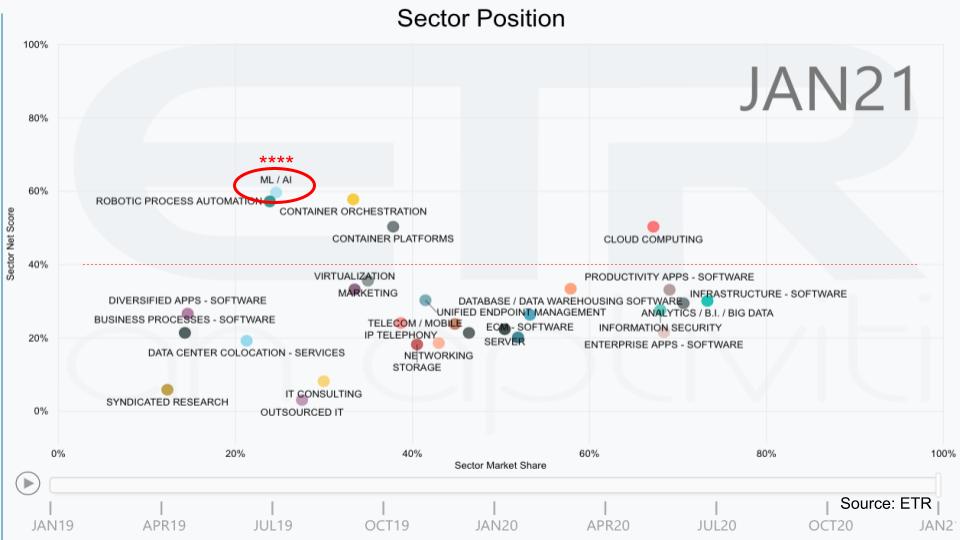

Last week we showed you the chart above from Enterprise Technology Research.

This data shows Net Score, or spending momentum, on the vertical axis. The horizontal axis is Market Share, or pervasiveness in the ETR data set. The red line at 40% is our subjective anchor; anything above 40% is really good in our view.
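For readers new to the metric: as we understand ETR's methodology, Net Score is roughly the share of respondents adopting a platform new or increasing spend, minus the share decreasing spend or replacing it, with flat spenders counted only in the total. The Python sketch below follows that description under that assumption; the respondent counts are hypothetical, and the ETR tutorial referenced at the end of this post is the authoritative source for the exact methodology.

```python
from dataclasses import dataclass

@dataclass
class SpendingSurvey:
    """Hypothetical counts of survey respondents for one vendor or sector."""
    adopting_new: int
    increasing: int
    flat: int
    decreasing: int
    replacing: int

    def net_score(self) -> float:
        """Percent positive minus percent negative; flat responses only enlarge the total."""
        total = (self.adopting_new + self.increasing + self.flat
                 + self.decreasing + self.replacing)
        positive = self.adopting_new + self.increasing
        negative = self.decreasing + self.replacing
        return 100.0 * (positive - negative) / total

ANCHOR = 40.0  # the subjective 40% red line

def above_anchor(score: float, anchor: float = ANCHOR) -> bool:
    return score > anchor

if __name__ == "__main__":
    survey = SpendingSurvey(adopting_new=20, increasing=40, flat=30, decreasing=6, replacing=4)
    score = survey.net_score()
    print(f"Net Score {score:.0f}%, above the line: {above_anchor(score)}")  # Net Score 50%, above the line: True
```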

Machine learning and AI are the No. 1 area of spending velocity and have been for a while, hence the four stars. Robotic process automation is increasingly an adjacency to AI, and you could argue cloud is where all the machine learning action is taking place today and is another adjacency, although we think AI continues to move out of the cloud for the reasons we just described.

Enterprise AI specialists carve out positions

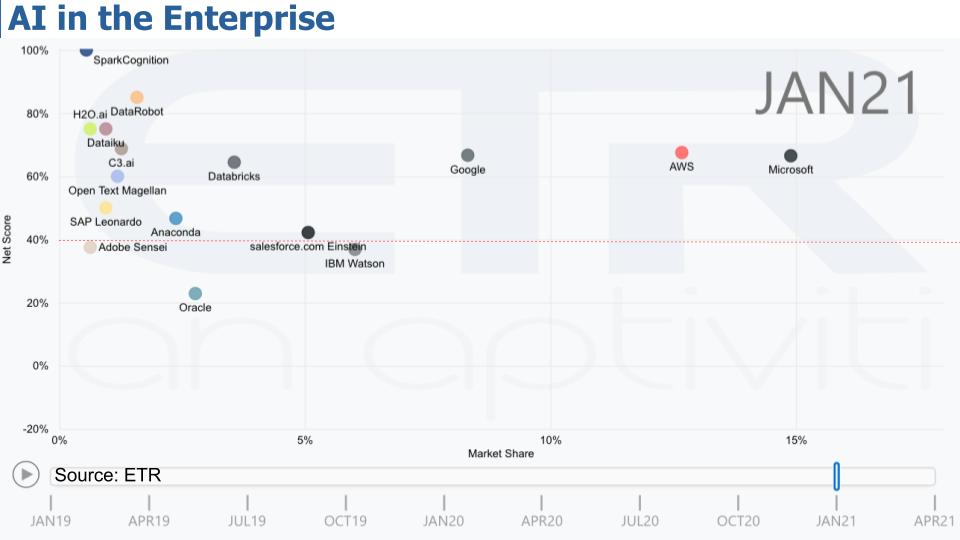

The chart above shows some of the vendors in the space that are gaining traction. These are the companies that chief information officers and information technology buyers associate with their AI/ML spend.

This graph uses the same Y/X coordinates: Spending Velocity on the vertical axis by Market Share on the horizontal axis, with the same 40% red line.

The big cloud players, Microsoft, AWS and Google, dominate AI and ML with the most presence. They have the tooling and the data. As we said, lots of modeling is going on in the cloud, but this will be pushed into remote AI inference engines that will collectively have massive processing capabilities. We are moving away from peak centralization, and this presents great opportunities to create value and apply AI to industry.

Databricks Inc. is seen as an AI leader and stands out with a strong Net Score and a prominent Market Share. SparkCognition Inc. is off the charts in the upper left with an extremely high Net Score, albeit from a small sample. The company applies machine learning to massive data sets. DataRobot Inc. does automated AI; it's super high on the Y axis. Dataiku Inc. helps create machine learning-based apps. C3.ai Inc. is an enterprise AI company founded and run by Tom Siebel. You see SAP SE, Salesforce.com Inc. and IBM Watson just at the 40% line. Oracle is also in the mix with its autonomous database capabilities, and Adobe Inc. shows up as well.

The point is that these software companies are all embedding AI into their offerings. And incumbent companies that are trying not to get disrupted can buy AI from software companies; they don't have to build it themselves. The hard part is how and where to apply AI. And the simple answer is: Follow the data.

Key takeaways

There's so much more to this story, but let's leave it there for now and summarize.

We've been pounding the table about the post-x86 era and the importance of volume in lowering the costs of semiconductor production, and today we've quantified something we haven't really seen much of: the actual performance improvements we're seeing in processing today. Forget Moore's Law being dead – that's irrelevant. The original premise is being blown away this decade by SoC and the coming system-on-package designs. Who knows what quantum computing holds for the future in terms of performance increases.

These trends are a key enabler of AI applications, and as is most often the case, the innovation is coming from consumer use cases; Apple continues to lead the way. Apple's integrated hardware and software approach will increasingly move into the enterprise mindset. Clearly the cloud vendors are moving in that direction. You see it with Oracle Corp. as well. It just makes sense that optimizing hardware and software together will gain momentum, because there's so much opportunity for customization in chips, as we discussed last week with Arm Ltd.'s announcement. And it's the direction new CEO Pat Gelsinger is taking Intel Corp.

One aside: Gelsinger may face massive challenges with Intel, but he's right that semiconductor demand is increasing and there's no end in sight.

If you're an enterprise, you should not stress about inventing AI. Rather, your focus should be on understanding what data gives you competitive advantage and how to apply machine intelligence and AI to win. You'll buy, not build, AI.

Data, as John Furrier has said many times, is becoming the new development kit. He said that 10 years ago and it's more true now than ever before:

Data is the new development kit.

If you're an enterprise hardware player, you will be designing your own chips and writing more software to exploit AI. You'll be embedding custom silicon and AI throughout your product portfolio, and you'll increasingly be bringing compute to data. Data will mostly stay where it's created. Systems, storage and networking stacks are all being disrupted.

If you develop software, you now have incredible processing capabilities in the palm of your hand, and you're going to write new applications to take advantage of them and use AI to change the world. You'll have to figure out how to get access to the most relevant data, secure your platforms and innovate.

And finally, if you're a services company, you have many opportunities to help companies trying not to be disrupted. You have the deep industry expertise and horizontal technology chops to help customers survive and thrive.

Privacy? AI for good? Those are whole topics on their own, extensively covered by journalists. We think for now it's prudent to gain a better understanding of how far AI can go before we determine how far it should go and how it should be regulated. Protecting our personal data and privacy is something we should most definitely care about, but generally we'd rather not stifle innovation at this point.

Keep in touch

Remember, these episodes are all available as podcasts wherever you listen. Email david.vellante@siliconangle.com, DM @dvellante on Twitter and comment on our LinkedIn posts.

Also, check out this ETR Tutorial we created, which explains the spending methodology in more detail. Note: ETR is a separate company from Wikibon/SiliconANGLE. If you would like to cite or republish any of the company's data, or inquire about its services, please contact ETR at legal@etr.ai.


Image: BuffaloBoy

Source: https://siliconangle.com/2021/04/10/new-era-innovation-moores-law-not-dead-ai-ready-explode/
